Learning Supported MPC¶

MPC with Hybrid Model of a Bioreactor¶

Introduction¶

This example is meant as a quick guide for solving model predictive control problems with gray-box models. We will see how

to define a model using Hilo modelling framework

to training an Artificial Neural Network using the Hilo-Pythorch wrapper

to easily construct an gray-box model

to setup a Nonlinear Model Predictive Control Problem

This example was used in different publications [1], [2], [3] and [4].

Model¶

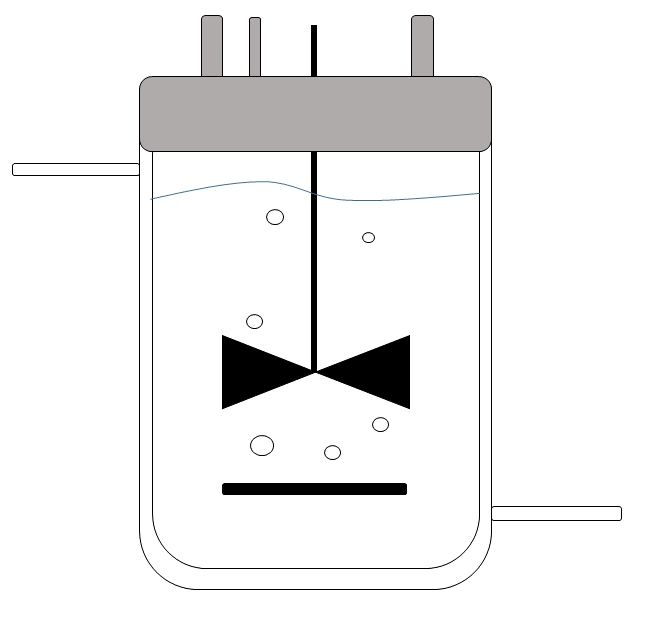

In this example we want to control a continuous bioreactor using a Model Predictive Control. The reactor uses E.Coli for the production of \(\beta\)-galactosidase. The available model that will be used with our MPC is

where \(X,S\) and \(I\) are concentrations of biomass, glucose and inducer in g/l respectively, and \(P\) is the activity of \(\beta\)-galactosidase in in enzyme unit per milliliter. The inputs are the glucose diluition rate \(D_S\) and inducer diluition rate \(D_I\) in 1/h, \(S_F\) is the concentration of glucose in the feed \(F_S\) and \(D = D_S + D_I\).

The tricky part here is that the kinetic rates \(\mu, R_s\) and \(R_{fp}\) are unknown.

Objective¶

The goal is maintaining a setpoing \(P_{ref}=2\) of \(\beta\)-galactosidase using an gray-box model where we learned the reaction rates \(\mu, R_s\) and \(R_{fp}\) from data coming from 5 fedbatch experiments. In this example we will learn the reaction rates using an Artificial Neural Network.

The Model predictive Control problem to solve is

where the unknown reactions rate are substituted by a neural network \(\rho_{w}(x)\). The neural network has 2 features \(S\) and \(I\) and three labels\(\mu, R_s\) and \(R_{fp}\).

Plant model¶

The model used to simulate the plant is instead more complex and contains two extra states the inducer shock factor\(ISF\), and the inducer recovery factor \(IRF\)

with the following reaction rates

Model Builder¶

Import necessary libraries

[2]:

import sys # You don't need this if you installed HILO-MPC using pip!

sys.path.append('../../../') # You don't need this if you installed HILO-MPC using pip!

from hilo_mpc import Model

from hilo_mpc.util.plotting import set_plot_backend

import pandas as pd

# Set plot backend. Can be 'matplotlib' or 'bokeh'

set_plot_backend('bokeh')

# # Ignore deprecation warnings coming from tensorflow

# import warnings

# warnings.filterwarnings("ignore")

Define the model using Hilo Model builder

[3]:

model = Model(plot_backend='bokeh', name='mpc_model')

x = model.set_dynamical_states(['X', 'S', 'P', 'I'])

u = model.set_inputs(['DS', 'DI'])

p = model.set_parameters(['Sf', 'If', 'mu', 'Rs', 'Rfp'])

# Unwrap states

X = x[0]

S = x[1]

P = x[2]

I = x[3]

# Unwrap inputs

DS = u[0]

DI = u[1]

# Unwrap parameters

Sf = p[0]

If = p[1]

# Unknown reaction rates

mu = p[2]

Rs = p[3]

Rfp = p[4]

D_tot = DS + DI

dX = mu * X - D_tot * X

dS = - Rs * X - D_tot * S + DS * Sf

dP = Rfp * X - D_tot * P

dI = - D_tot * I + DI * If

model.set_dynamical_equations([dX, dS, dP, dI])

Neural Network Training¶

The reaction rates are unknown and will be trained using an ANN. First we load the hilo ANN

[4]:

from hilo_mpc import ANN, Layer

then load the data

[5]:

df = pd.read_csv('../../../hilo_mpc/library/data/learning_ecoli/complete_dataset_5_batches.csv', index_col=0).dropna()

df.head()

[5]:

| X | S | P | I | V | time | FeedS | FeedI | Sf | If | mu | Rs | Rfp | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0.0 | 0.276405 | 40.065362 | 0.000000 | 0.000000 | 1.000000 | 0.0 | 0.000000 | 0.000000 | 100.0 | 4.0 | 0.404814 | 0.809628 | 0.009200 |

| 0.5 | 0.162451 | 40.041573 | 0.195587 | 0.005323 | 1.000000 | 0.5 | 0.100000 | 0.050000 | 100.0 | 4.0 | 0.404814 | 0.809628 | 0.009200 |

| 1.0 | 0.237256 | 41.693530 | 0.000000 | 0.080970 | 1.075000 | 1.0 | 0.105127 | 0.052564 | 100.0 | 4.0 | 0.402000 | 0.804000 | 0.329145 |

| 1.5 | 0.382575 | 43.638874 | 0.000000 | 0.128871 | 1.153845 | 1.5 | 0.110517 | 0.055259 | 100.0 | 4.0 | 0.394359 | 0.788718 | 0.361241 |

| 2.0 | 0.366413 | 44.761453 | 0.000000 | 0.269108 | 1.236733 | 2.0 | 0.116183 | 0.058092 | 100.0 | 4.0 | 0.384436 | 0.768873 | 0.373405 |

then train the net

[6]:

# Neural network features and labels

features = ['S', 'I'] # states of the actual model

labels = ['mu', 'Rs', 'Rfp'] # unknown parameters

# Create and train neural network

ann = ANN(features, labels)

ann.add_layers(Layer.dense(10, activation='sigmoid'))

# ann.add_layers(Layer.dropout(.2))

ann.setup(save_tensorboard=True, tensorboard_log_dir='./runs/ecoli')

# Add the dataset to the trainer

ann.add_data_set(df)

# Train

ann.train(1, 2000, test_split=.2, patience=100, verbose=0)

c:\Users\bruno\Documents\GitHub\hilo-mpc\docs\docsource\examples\../../..\hilo_mpc\modules\machine_learning\nn\nn.py:322: FutureWarning: The frame.append method is deprecated and will be removed from pandas in a future version. Use pandas.concat instead.

data = self._data_sets[0].append(self._data_sets[1:], ignore_index=True, sort=False)

c:\Users\bruno\Documents\GitHub\hilo-mpc\docs\docsource\examples\../../..\hilo_mpc\plugins\pytorch\wrapper.py:381: UserWarning: Early stopping is only available when validation data is supplied for the training of the neural network

warnings.warn("Early stopping is only available when validation data is supplied for the training of the "

Evaluate on test data

Creating the hybrid model¶

Now that the NN is created we substitute it to the unknown parameters

[7]:

model.substitute_from(ann)

we load the “real plant”. Note that the real plant has 6 states instead of 4

[8]:

from hilo_mpc.library.models import ecoli_D1210_conti

plant = ecoli_D1210_conti(model='complex')

plant.setup(dt=1)

x0_plant = [0.1, 40, 0, 0, 1, 0]

plant.set_initial_conditions(x0_plant)

Model Predictive Control¶

We are ready to put everying in the control loop.

[9]:

from hilo_mpc import NMPC, SimpleControlLoop

model.setup(dt=1)

nmpc = NMPC(model)

nmpc.quad_stage_cost.add_states(names='P', ref=2., weights=10.)

nmpc.quad_terminal_cost.add_states(names='P', ref=2., weights=10.)

nmpc.horizon = 10

nmpc.set_box_constraints(x_lb=[0., 0., 0., 0.], u_lb=[0, 0])

nmpc.set_initial_guess(x_guess=[.1, 40., 0., 0.], u_guess=[0, 0])

nmpc.setup(options={'print_level':0})

We can have print the problem summary as follows

[10]:

print(nmpc)

=================================================================

Nonlinear Model Predictive Control

-----------------------------------------------------------------

Prediction horizon: 10

Control horizon: 10

Model name: mpc_model

Sampling interval: 1

-----------------------------------------------------------------

Objective function

-----------------------------------------------------------------

Lagrange (stage) cost:

@1=2, ((P-@1)*(10*(P-@1)))

Mayor (terminal) cost:

@1=2, ((P-@1)*(10*(P-@1)))

-----------------------------------------------------------------

Box constraints

-----------------------------------------------------------------

States

+-------+-----+-----+

| State | LB | UB |

+-------+-----+-----+

| X | 0.0 | inf |

| S | 0.0 | inf |

| P | 0.0 | inf |

| I | 0.0 | inf |

+-------+-----+-----+

Inputs

+-------+-----+-----+

| Input | LB | UB |

+-------+-----+-----+

| DS | 0.0 | inf |

| DI | 0.0 | inf |

+-------+-----+-----+

-----------------------------------------------------------------

Initial guesses

-----------------------------------------------------------------

States

+-------+-------+

| State | Guess |

+-------+-------+

| X | 0.1 |

| S | 40.0 |

| P | 0.0 |

| I | 0.0 |

+-------+-------+

Inputs

+-------+-------+

| Input | Guess |

+-------+-------+

| DS | 0.0 |

| DI | 0.0 |

+-------+-------+

-----------------------------------------------------------------

Numerical solution and optimization stats

-----------------------------------------------------------------

Integration: collocation method.

NLP solver: ipopt.

Number of optimization variables: 184.

=================================================================

Now we run the simulation. One way is to use the SimpleControlLoop class. This allows us to run the simulation and plot in a very compact way. NOTE this functionality is still in beta version and can be subject to changes

[12]:

control_loop = SimpleControlLoop(plant, nmpc)

[13]:

control_loop.run(100,p=[100,4])

control_loop.plot(output_notebook=True)

[13]:

'C:\\Users\\bruno\\AppData\\Local\\Programs\\Python\\Python39\\lib\\runpy.py'

Alternativelly, we can do it in a much more “manual” way.

[14]:

n_steps = 100

p0 = [100,4]

# Reset the solution, since the plant has been run in the SimpleControlLoop class. This is not necessary if

# no simulations with the plant took place.

plant.reset_solution()

plant.set_initial_conditions(x0=x0_plant)

solution_hybrid = plant.solution

for step in range(n_steps):

u = nmpc.optimize(x0_plant[0:4], cp=p0)

plant.simulate(u=u, p = p0)

x0_plant = solution_hybrid['x:f']

and then create the plots manually as well. Here we use bokeh, but any other plotting library can be used

[15]:

from bokeh.io import output_notebook, show

from bokeh.plotting import figure

from bokeh.layouts import gridplot

import numpy as np

output_notebook()

p_tot = []

for state in plant.dynamical_state_names:

p = figure(background_fill_color="#fafafa", width=300, height=300)

p.line(x=np.array(solution_hybrid['t']).squeeze(), y=np.array(solution_hybrid[state]).squeeze(),

legend_label=state, line_width=2)

for i in range(len(nmpc.quad_stage_cost._references_list)):

if state in nmpc.quad_stage_cost._references_list[i]['names']:

position = nmpc.quad_stage_cost._references_list[i]['names'].index(state)

value = nmpc.quad_stage_cost._references_list[i]['ref'][position]

p.line([np.array(solution_hybrid['t'][1]).squeeze(), np.array(solution_hybrid['t'][-1]).squeeze()],

[value, value], legend_label=state + '_ref',

line_dash='dashed', line_color="red", line_width=2)

p.yaxis.axis_label = state

p.xaxis.axis_label = 'time'

p.yaxis.axis_label_text_font_size = "12pt"

p.yaxis.major_label_text_font_size = "12pt"

p.xaxis.major_label_text_font_size = "12pt"

p.xaxis.axis_label_text_font_size = "12pt"

p_tot.append(p)

show(gridplot(p_tot, ncols=2))

Comparing MPC with perfect model¶

We compare the results of the MPC using the gray-box model with the MPC using the real model.

[16]:

plant = ecoli_D1210_conti(model='complex')

plant.setup(dt=1)

x0_plant = [0.1, 40, 0, 0, 1, 0]

plant.set_initial_conditions(x0_plant)

# Initialize MPC

nmpc_real = NMPC(plant)

nmpc_real.quad_stage_cost.add_states(names='P', ref=2., weights=10.)

nmpc_real.quad_terminal_cost.add_states(names='P', ref=2., weights=10.)

nmpc_real.horizon = 10

nmpc_real.set_box_constraints(x_lb=[0., 0., 0., 0., 0, 0], u_lb=[0, 0])

nmpc_real.set_initial_guess(x_guess=[.1, 40., 0., 0., 1, 0], u_guess=[0, 0])

nmpc_real.setup(options={'print_level':0})

solution_real = plant.solution

control_loop = SimpleControlLoop(plant, nmpc_real)

control_loop.run(steps=n_steps, p=p0)

control_loop.plot(output_notebook=True)

[16]:

'C:\\Users\\bruno\\AppData\\Local\\Programs\\Python\\Python39\\lib\\runpy.py'

and then plot both results overlapping

[17]:

output_notebook()

p_tot = []

for state in plant.dynamical_state_names:

p = figure(background_fill_color="#fafafa", width=300, height=300)

p.line(x=np.array(solution_hybrid['t']).squeeze(), y=np.array(solution_hybrid[state]).squeeze(),

legend_label=state, line_width=2)

p.line(x=np.array(solution_real['t']).squeeze(), y=np.array(solution_real[state]).squeeze(),

legend_label=state + '_real', line_width=2, color='green')

for i in range(len(nmpc.quad_stage_cost._references_list)):

if state in nmpc.quad_stage_cost._references_list[i]['names']:

position = nmpc.quad_stage_cost._references_list[i]['names'].index(state)

value = nmpc.quad_stage_cost._references_list[i]['ref'][position]

p.line([np.array(solution_hybrid['t'][1]).squeeze(), np.array(solution_hybrid['t'][-1]).squeeze()],

[value, value], legend_label=state + '_ref',

line_dash='dashed', line_color="red", line_width=2)

p.yaxis.axis_label = state

p.xaxis.axis_label = 'time'

p.yaxis.axis_label_text_font_size = "12pt"

p.yaxis.major_label_text_font_size = "12pt"

p.xaxis.major_label_text_font_size = "12pt"

p.xaxis.axis_label_text_font_size = "12pt"

p_tot.append(p)

show(gridplot(p_tot, ncols=2))